The word "crisis" is no longer sufficient to describe mental health in the UK. We're long past that now, and what we face today is a reckoning. Since the pandemic, over 500,000 people have left the workforce citing mental health issues as the cause, up by a staggering 25%. Fears of a workforce exodus are fuelling inflationary pressures, with inflation already at a 40-year high. At the same time, the UK continues to be the only major developed country with employment below pre-pandemic levels heading into 2023.

As demand for mental health services continues to outstrip supply, recent announcements by the Chancellor have alarmed providers across the country, who fear they may be first in line for cuts to an already skeletal service.

When the mental health of the population and the economy are so deeply intertwined, how exactly do we do more with less?

Mental health chatbots may raise a few eyebrows at first. But struggling services could use chatbots to reduce clinic appointment times and offer patients respite from worsening symptoms of depression and anxiety.

Mental health chatbots depend on natural language processing to understand the context and intent of a question and respond to it. They use machine learning to learn from large health data sets and continuously improve their performance and responses.

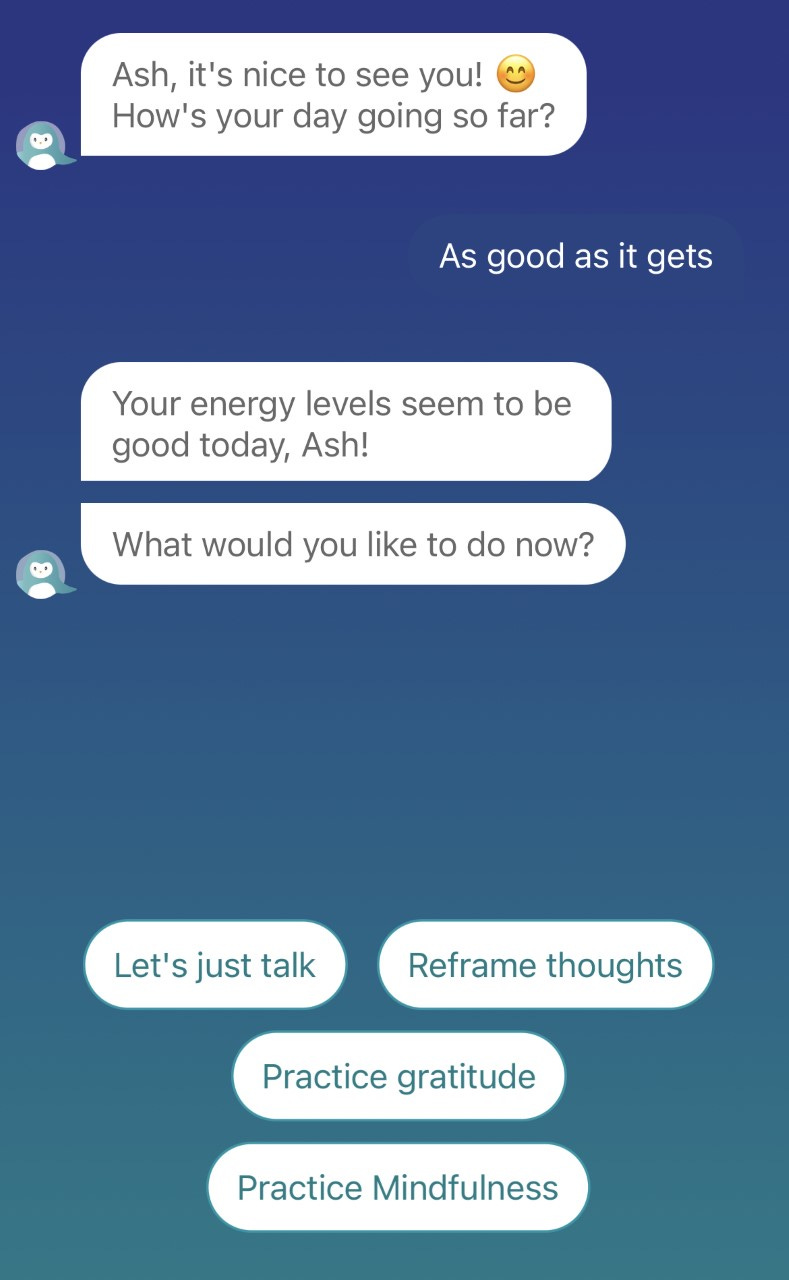

Both Wysa and Woebot, the leading mental health chatbot companies, use a text-based conversation interface with dialogue primarily controlled and led by the chatbots.

Despite the apprehension and stigma, people are more willing to accept AI chatbots that do not try to pass as human and instead establish their artificial nature immediately. Wysa's chatbot uses a cartoon penguin as its avatar and immediately acknowledges its AI nature during a conversation.

If you're not convinced, ChatGPT, another type of natural language processing chatbot, has taken the internet by storm with its uncanny ability to write in-depth essays on Hamlet and summarise the history of the Napoleonic Wars in a Shakespearean sonnet (honestly, I've tried). Natural language agents are poised to disrupt many fields, and psychiatry is one of them.

How would it work?

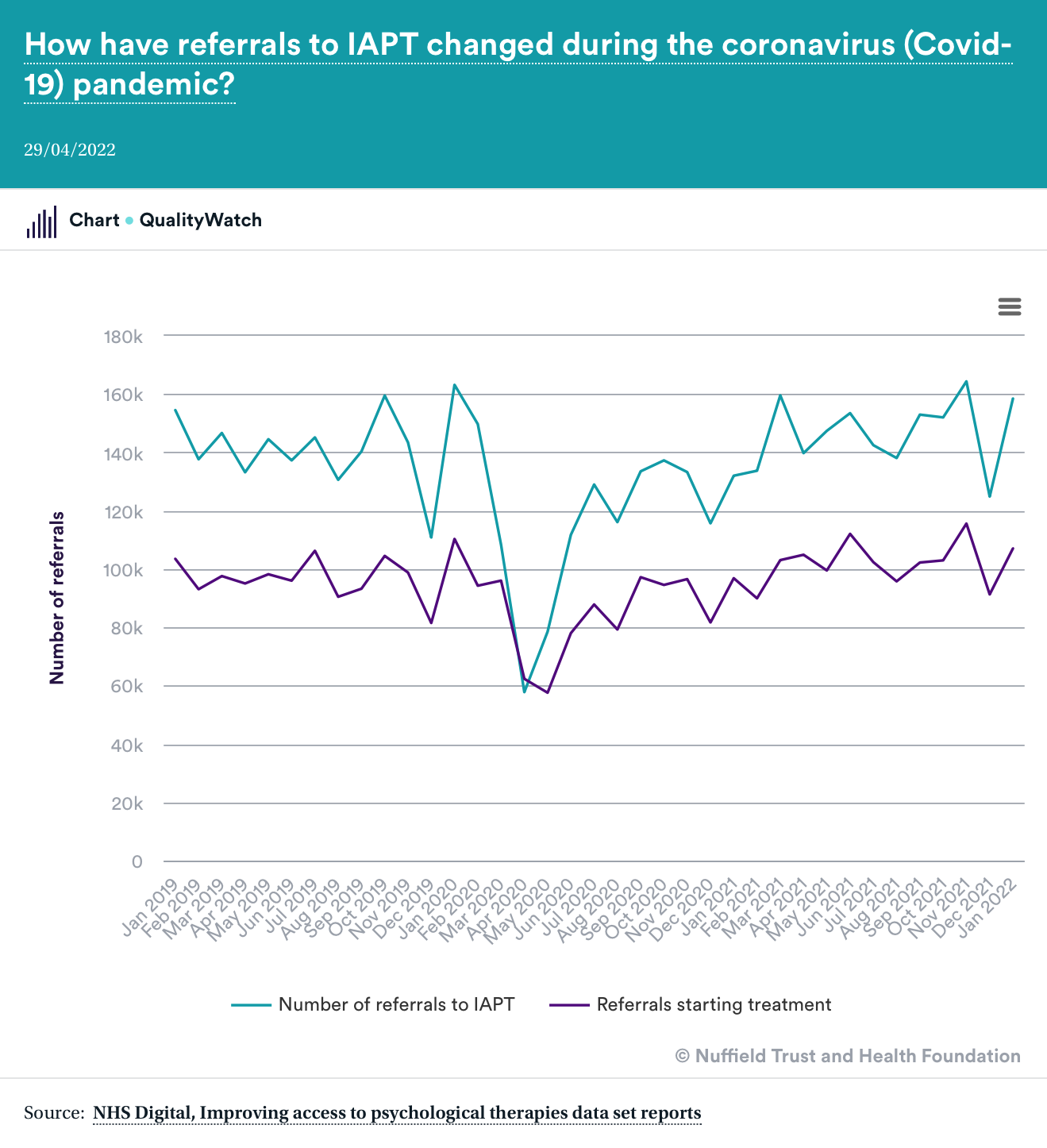

Improving Access to Psychological Therapies (IAPT) offers evidence-based talking therapies as first-choice interventions for common mental health conditions such as depression and anxiety. A patient can be referred to IAPT by their GP or self-refer.

Mental health chatbots can provide online cognitive behavioural therapy (CBT) via a web-based or mobile-based app to patients on IAPT waiting lists before seeing a therapist face to face.

One of the most significant advantages of this technology is the potential to reduce symptoms of anxiety and depression as patients wait for an appointment. Currently, over 50% of people referred to IAPT wait over 23 weeks to receive an initial assessment and a secondary follow-up session (when the treatment begins). Delays in treatment will only worsen mental health outcomes and lead to higher hospitalisation rates.

If mental health chatbots can be shown to reduce the severity of symptoms, they could be pivotal in saving the NHS time and money by reaching patients before they hit crisis point and by giving therapists more focused appointments, reducing clinic wait times.

Another clear advantage is the availability of a 24/7 chatbot service before, during and after therapy, which may provide the psychological support that keeps patients from dropping out of the service.

Due to the extensive waiting times, dropout rates from IAPT treatment have peaked at over 100,000 patients per year, contributing to the vicious cycle of patients waiting for treatment, dropping out and going to A&E in desperation.

Why are patients waiting so long?

A decade of poor workforce planning has meant that rising demand for mental health services has yet to be matched by similar increases in mental health staffing. The number of mental health nurses has actually declined by 5% since 2010, and the perception (and reality) of low pay and high levels of burnout makes the career less and less attractive: mental health trusts have the second-highest rate of sickness absence in the NHS.

Can mental health chatbots really work?

Research on mental health chatbots is nascent. A recent systematic review scoping the evidence base behind chatbot usage found that more than half of the studies (30/53) had a sample size of fewer than 50 participants.

Fewer than half of the studies examining clinical effectiveness (14/33) used a randomised controlled trial (RCT). The evidence that chatbots reduce depression was very weak (due to a high level of bias), and the effect was found to be not clinically important. The results for chatbots reducing anxiety were even less impressive.

What about safety?

Of the two RCTs that assessed safety, both reported the chatbot as safe, with users experiencing no adverse events (suicide attempts, worsening of depression/anxiety, death) or discomfort.

But what would happen if a patient expressed suicidal ideation to the chatbot? Would it be possible to pick this up and refer the patient to the appropriate channels?

Do these results spell the end of AI chatbot use in mental health?

They do paint a worrying picture of over-celebrating innovation without due diligence.

But you shouldn't dismiss AI chatbots just yet.

The market is ripe for an innovator demonstrating impact. Wysa, an India-based AI chatbot company with offices in London, is conducting a £1 million RCT at the Central and North West London NHS Foundation Trust. It is out to prove that prescribing AI CBT at the point of IAPT referral reduces symptoms of anxiety and depression.

The results will be out next year.

Wysa has conducted 13 peer-reviewed trials in a little over a year to prove the superiority of its chatbot over competitors. The latest peer-reviewed study showed people using the app developed a therapeutic alliance with the chatbot within five days.

The therapeutic alliance is one of the most robust mechanisms of change in psychotherapy interventions. Wysa's findings have justified its implementation across 17 NHS trusts, including Dorset and Southampton. The app is the top-rated digital mental health intervention in the NHS and has recently become completely integrated with the Singaporean healthcare system.

So, are we ready for mental health chatbots?

Mental health has to adopt innovation. And quickly. Because if we don't, what's the alternative? Maintaining the status quo will mean hundreds of thousands of patients facing delayed treatment, worsening outcomes and many more millions not working due to ill mental health.

The time for AI chatbots is now dawning upon us. Their widespread implementation will likely come in the next year or two if the clinical trials in London demonstrate that Wysa is clinically effective and safe.

Clinical robustness is still a lagging issue in health technology, with most clinical trials conducted by a small minority of companies primarily focusing on clinical efficacy.

But the rewards for those that focus on evidence are clear to see.

Wysa is quickly becoming the market leader for mental health chatbots by conducting high-quality trials and taking advantage of the clear evidence gap in digital mental health. Demonstrable impact gives Wysa a key differentiator over its competitors.

Last month, Travelers, the world's largest workers' compensation insurer, announced the launch of Wysa's return-to-work app, designed to support injured employees who face psychosocial barriers to recovery. Pilot studies have shown that injured workers using the app missed a third fewer work days than those not using it.

There is huge economic utility in getting people the help they need when they actually need it.

As we speed ahead to an uncertain future, can we really afford to ignore digital solutions that offer (or will offer) a credible way to deliver cost savings and help clinicians improve their everyday practice? Or will we let people languish in unemployment as their mental health worsens, and provide no credible solutions?

If we want to go beyond platitudes about mental health being important, confining technologies to the corner of the toolbox will no longer do. We have to be ready to accept that there will be no such thing as business as usual.

Really interesting post Ash

Hi Ash - I just wanted to find out more about ChatbotGPT --my husband is maybe a little obsessed with it. But I asked the AI about AI in mental health situations. Here is the answer:

"Artificial intelligence (AI) has the potential to enhance mental health care by providing additional support and resources for patients and clinicians. For example, AI-powered tools such as chatbots and virtual assistants can be used to provide mental health information and support, as well as to triage patients and connect them with appropriate care. AI can also be used to analyze patient data and provide personalized treatment recommendations to clinicians.

However, it is important to recognize that AI is not a replacement for human care and that it is not appropriate for every situation. It is important for mental health care providers to consider the unique needs and preferences of each individual patient and to use a combination of human and technological resources to provide the most effective care. It is also important to ensure that AI-powered tools are designed and implemented ethically, with the privacy and well-being of patients being a top priority."

I'm mostly amazed that Chatbot was so quick to answer. Your example about Woebot's inappropriate response was chilling. I think for diagnosis and treatment suggestions on a very basic level, AI can work. Thanks for your article!